Sometime in the 23rd century, a Starfleet Academy instructor leads a class of red shirts across the terrain of an alien world and speaks with earnest gravitas about the greatest technological innovation of the 21st century.

“The Machine,” he begins as holographic diagrams and video windows pop up above a bubbling lagoon. “It changed the basic architecture of computing, using a massive pool of memory at the centre of everything. And by doing so, it changed the world.”

Andrew Wheeler, vice-president and deputy director at HP Labs and an HPE fellow, faces the tall order of moving today’s real-world technology to the lofty new paradigm of computing imagined in this Star Trek-branded spot from HPE, released in June as part of a partnership with summer blockbuster Star Trek Beyond. While the commercial’s claims may seem grandiose, Wheeler doesn’t shy away from his team’s ambitions and the need for a new architecture for computing.

“The Machine could solve problems previously unimaginable given real-time restraints,” he says. “Today’s conventional data centres won’t be able to keep up with the exponential growth of demand.”

https://www.youtube.com/watch?v=y3sHh6CsN7c

Facing down the problems of exponential growth

Moore’s Law predicted that the number of transistors on an integrated circuit would double roughly every two years, and the growth of cloud computing has been similarly exponential. As more organizations turn to infrastructure as a service and more of the world connects to the Internet, service providers are building and acquiring data centres at a staggering pace to meet demand.

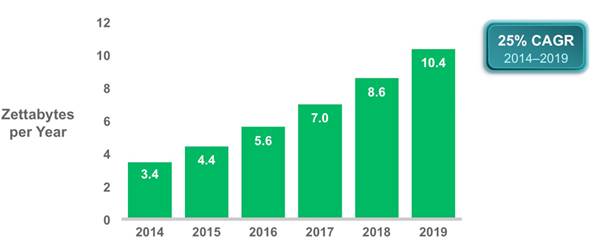

Over the next five years, that exponential growth promises to test the limits of today’s storage and processing technology. By 2019, annual data centre traffic will reach 10.4 zettabytes, up from 3.4 zettabytes in 2014, predicts the Cisco Global Cloud Index published in April.

Feeding the rapid growth of data centres is the emergence of the Internet of Things: connected devices embedded in machinery, vehicles, and textiles are creating even more data points that must be collected and crunched by big data analytics software. According to Cisco, North America had 7.3 device connections per user in 2014, the highest of any region, and will rise to 13.6 connections per user in 2019. From a data perspective, connected devices will generate more than 500 zettabytes by 2019, up from 135 zettabytes in 2014.
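To put the Cisco figures quoted above on a common footing, the short Python sketch below converts each 2014-to-2019 pair into a compound annual growth rate. The `cagr` helper and the dictionary labels are illustrative names, not anything from Cisco’s report, and the calculation assumes smooth year-over-year growth across the five years.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25% a year)."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the article from the Cisco Global Cloud Index, 2014 to 2019.
figures = {
    "data centre traffic (ZB)": (3.4, 10.4),
    "data generated by connected devices (ZB)": (135, 500),
    "North American connections per user": (7.3, 13.6),
}

for label, (start, end) in figures.items():
    # Five years separate the 2014 baseline from the 2019 forecast.
    print(f"{label}: {cagr(start, end, 5):.1%} a year")
```

On these numbers, data centre traffic works out to growth of about 25 per cent a year, which is what tripling in five years looks like when spread evenly.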

Then there are the power requirements. A study at the University of Leeds in the U.K. shows that data centres’ electricity needs double every four years. Considering that global data centre power requirements last year already exceeded the total power consumption of the U.K., the research suggests that the world will have to go on a planned Internet diet to avoid a future power crisis.
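A doubling period is easier to compare with other growth figures once it is turned into a yearly rate. The sketch below does that for the four-year doubling cited above; the function names are illustrative, and the projection simply assumes the doubling trend continues unchanged, which the study itself may not claim.

```python
def annual_rate_from_doubling(doubling_years: float) -> float:
    """Yearly growth rate implied by a fixed doubling period."""
    return 2 ** (1 / doubling_years) - 1


def relative_demand(years_ahead: float, doubling_years: float = 4.0) -> float:
    """Demand relative to today (1.0) if the doubling trend simply continues."""
    return 2 ** (years_ahead / doubling_years)


print(f"Implied growth: {annual_rate_from_doubling(4):.1%} a year")
for years in (4, 8, 12):
    print(f"In {years} years: {relative_demand(years):.0f}x today's electricity demand")
```

Doubling every four years is equivalent to growth of roughly 19 per cent a year, and it compounds to eight times today’s demand after 12 years.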

“When you look at the massive amount of data that’s going to be collected and the idea of aggregating that idea in an IoT-enabled world and scale that up into large datasets, today the fastest way to do that is with high-performance computers and in-memory databases,” says Crawford Del Prete, chief research officer at IT consultancy firm IDC. “But this still has some significant limitations to speed, and the power required.”

Rearchitecting computers to change the equation

HP Labs’ solution to the problem of exponential growth is, in essence, to redesign the fundamental architecture of computing so that it delivers the speed needed at a much lower power requirement. After all, the core architecture of computers hasn’t been redesigned in more than 50 years. Rather than torquing up the horsepower of a classic hot rod by putting in a bigger engine, HP wants to redesign everything that’s under the hood and make a Tesla.

“Instead of cramming more cores into processors, we are starting with a memory-centric view first,” Wheeler says. “We can create this central memory pool that can act as some level of main memory and your permanent storage.”

https://www.youtube.com/watch?v=NZ_rbeBy-ms

HPE has developed a new type of storage medium, the memristor, to serve memory, cache, and storage functions simultaneously. Called “universal memory,” this memory pool would be connected by a photonic fabric (think fibre optic cable) instead of copper wiring, so data moves as light rather than electricity. Under this model, the appropriate amount of CPU can be assigned to each workload.

HPE says this model of computing is more elegant than current architecture because it eliminates the need to hand off data between the various layers of storage, with each hand-off travelling over a communications bus that requires software to manage the flow. With The Machine, the company says, all of that can be cut out and data accessed instantly.
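The difference between the two models can be pictured with a deliberately simple toy in Python. It is not HPE’s design or software: the `TieredStore` and `UniversalPool` classes are hypothetical, and the sketch only counts how many times a record is copied between layers before a processor can use it, with no attempt to model real latencies or hardware.

```python
class TieredStore:
    """Conventional layout: a record is copied up through each layer before use."""

    def __init__(self, data):
        self.disk = dict(data)
        self.dram = {}
        self.cache = {}
        self.copies = 0  # number of hand-offs between layers

    def read(self, key):
        if key not in self.cache:
            if key not in self.dram:
                self.dram[key] = self.disk[key]  # disk -> DRAM hand-off
                self.copies += 1
            self.cache[key] = self.dram[key]  # DRAM -> cache hand-off
            self.copies += 1
        return self.cache[key]


class UniversalPool:
    """Memory-centric layout: every reader addresses one shared pool directly."""

    def __init__(self, data):
        self.pool = dict(data)
        self.copies = 0  # stays at zero: nothing is staged between layers

    def read(self, key):
        return self.pool[key]


records = {"a": 1, "b": 2, "c": 3}
tiered = TieredStore(records)
pool = UniversalPool(records)
for key in records:
    tiered.read(key)
    pool.read(key)

print("tiered hand-offs:", tiered.copies)
print("pooled hand-offs:", pool.copies)
```

In the tiered version each first read costs two copies, while the pooled version never stages data at all, which is the kind of hand-off the memory-centric approach aims to remove.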

“HPE is putting on the table a different way to think about how data is handled in the computer,” Del Prete says.

Pushing industry towards the paradigm shift

“Build it and they will come” worked for Kevin Costner, but Wheeler knows he’s not starring in Field of Dreams as his team develops The Machine. While finding ways to demonstrate immediate efficiency gains by applying the new architecture to existing systems, HPE is also engaging industry to imagine solving problems they wouldn’t even consider attempting with current technology.

“I want to get to the end goal of The Machine,” he says. “But I know how business works. We have to roll out some parts of this infrastructure as improvements to existing infrastructure.”

Since announcing the project in 2014, HPE has developed the new Linux-based OS required for The Machine in an open source model, making a repository available on GitHub. It has been developing code and testing algorithms for The Machine on its Superdome X servers, which are designed for high-performance computing and provide a vast amount of in-memory compute capability.

HPE says its architecture work let it cut the servers supporting its website, HP.com (this was before the company split), from 25 server racks to three. The slimmed-down setup supports 300 million hits a day on 720 watts, the company reported in a 2014 blog post.

But HPE wants to demonstrate that The Machine is about more than further efficiency in computing and power consumption. Wheeler describes a recent contest that HPE organized with a professional airlines consortium, which used a huge dataset detailing flight operations and asked some airlines to apply graph analytics to problems created by unexpected changes in flight schedules.

Imagine a pilot is able to land a flight 10 minutes early, only to find their intended gate is still busy, Wheeler says. Even though many other spare gates may be available, it’s likely the plane will have to sit on the tarmac and wait because it’s too difficult to change all the details related to that flight to another gate – the crew, the baggage, the maintenance, and so on.

“There’s not a single type of server that can help them optimize things like that,” he says. “With an architecture like The Machine, you could be tracking all that information in realtime.”
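The gate example is, at heart, a graph problem: everything tied to a flight hangs off its gate assignment, so moving the gate means finding every linked record. The Python sketch below shows the idea with a breadth-first traversal over a tiny, entirely hypothetical dependency graph. The node names and the `affected_by` helper are made up for illustration and do not come from the contest dataset or from HPE’s software.

```python
from collections import deque

# Hypothetical dependency graph: an edge A -> B means
# "a change to A forces an update to B".
dependents = {
    "gate:B12": ["flight:FL101"],
    "flight:FL101": ["crew:FL101", "baggage:FL101", "maintenance:FL101"],
    "baggage:FL101": ["carousel:4"],
    "crew:FL101": [],
    "maintenance:FL101": [],
    "carousel:4": [],
}


def affected_by(graph, start):
    """Breadth-first traversal listing every node reachable from start."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in graph.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                order.append(neighbour)
                queue.append(neighbour)
    return order


# Everything that must be updated if the flight leaves gate B12.
print(affected_by(dependents, "gate:B12"))
```

A real airline graph would hold millions of such links and change constantly, which is why keeping all of it in one large memory pool is attractive for this kind of query.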

With processing power more efficient and storage much cheaper in the future, businesses will begin automating many operational processes, says IDC’s Del Prete, making 20 per cent of them self-healing without any need for human intervention by 2022.

“Companies have to get a productivity boost from technology to become more efficient,” he says. “You have to be addressing a really hard problem that’s going to deliver huge amounts of value.”

Other hardware vendors in the industry are going about this by acquiring artificial intelligence innovators, retooling power architectures, and even redeveloping semiconductors. But HPE is the only one that’s rearchitecting the input/output architecture with a unified memory approach.

Before the end of the year, HPE plans to have a full-scale prototype up and running with the unified memory and software architecture in place. But it won’t have the fully realized performance yet, Wheeler says. Towards the end of the decade, he hopes to see a full-blown version of The Machine completed.

“You don’t just throw The Machine out there and say here you go,” he says. “There’s a lot of work that leads up to taking advantage of that.”

The prototype we see later this year will be looked at by industry analysts as a new box in HPE’s converged hardware portfolio, still a far cry from the world-changing innovation depicted in the Star Trek commercial. But as Wheeler would tell you, you have to show people what’s possible before you get them to eliminate what they consider impossible.