Western Digital today announced a new lineup of memory extension drives.

The Ultrastar DC ME200 memory extension drive uses flash memory and attaches via a U.2 port or as an HHHL (half-height, half-length) AIC (add-in card). As its name implies, it’s not used for data storage, but rather as a means to increase a system’s RAM capacity without investing in high-density DIMMs.

Onboard, the Ultrastar DC ME200 uses 15nm MLC NAND. Western Digital rates every drive for an endurance of 17 drive writes per day (DWPD), which is equivalent to 19 petabytes (PB) written for the 1TB model, 38PB for the 2TB model, and 78PB for the 4TB model.
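The endurance figures can be cross-checked with a little arithmetic. The sketch below reads the rating as 17 full drive writes per day and back-solves how many days of writing each quoted petabyte total implies. The rating period itself is not given in the announcement, and the decimal units (1PB = 1,000TB) and the function name are assumptions made for illustration.

```python
DWPD = 17  # drive writes per day, as quoted in the article

def implied_rating_days(capacity_tb, total_writes_pb, dwpd=DWPD):
    """Days of writing at `dwpd` needed to reach the quoted total.

    Assumes decimal units: 1 PB = 1000 TB.
    """
    daily_writes_tb = capacity_tb * dwpd
    return total_writes_pb * 1000 / daily_writes_tb

# Capacity (TB) -> quoted endurance (PB), taken from the article.
quoted = {1: 19, 2: 38, 4: 78}

for capacity_tb, total_pb in quoted.items():
    days = implied_rating_days(capacity_tb, total_pb)
    print(f"{capacity_tb}TB: {days:.0f} days (about {days / 365:.1f} years)")
```

Under these assumptions, all three models work out to a little over three years of sustained writing at the rated pace.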

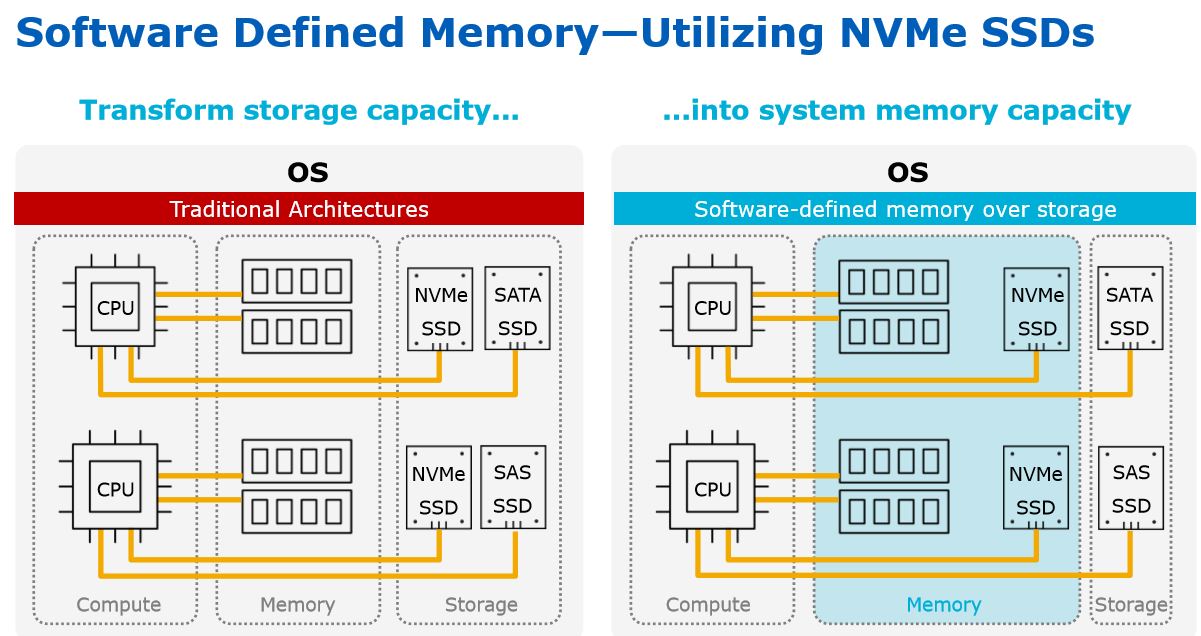

Using a technology called software-defined memory, servers are able to create a virtual pool of memory using flash storage. Benefits of software-defined memory include higher memory density per system, significantly lower cost compared to DRAM, and power efficiency on par with server-grade SSDs.

But that doesn’t mean it replaces DRAM entirely; it still needs some DRAM to operate. Western Digital recommends a 1:8 DRAM to DC ME200 pairing ratio. In other words, 8GB of Ultrastar DC ME200 for every 1GB of system RAM.
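As a quick illustration of the recommended ratio, the sketch below works out how much DRAM each capacity option would be paired with. The helper name is hypothetical, and treating 1TB as 1,024GB is an assumption; the announcement does not say which convention the capacities use.

```python
def dram_for_extension(extension_gb, ratio=8):
    """DRAM (in GB) implied by a 1:ratio DRAM-to-extension pairing."""
    return extension_gb / ratio

for capacity_tb in (1, 2, 4):
    extension_gb = capacity_tb * 1024  # assumption: 1TB = 1024GB
    dram_gb = dram_for_extension(extension_gb)
    print(f"{capacity_tb}TB DC ME200 -> {dram_gb:.0f}GB of DRAM")
```

At 1:8, the 4TB model would be paired with 512GB of system RAM.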

The Ultrastar DC ME200 uses an intelligent prefetch algorithm to predictively load data from the extension drive into DRAM before it’s needed. This counteracts some of the latency issues associated with using flash memory. Western Digital promises up to 94 per cent of DRAM performance in certain workloads.
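Western Digital has not described how its prefetcher works, so the following is only a toy model of the general idea: a small DRAM cache that, on every access, also loads the next couple of pages from the slower drive. It uses plain sequential readahead with least-recently-used eviction, not the company's algorithm, and every name in it is hypothetical.

```python
from collections import OrderedDict

class ReadaheadCache:
    """Toy DRAM cache in front of a slower page store, with next-page prefetch."""

    def __init__(self, capacity_pages, readahead=2):
        self.capacity = capacity_pages
        self.readahead = readahead
        self.pages = OrderedDict()  # page number -> True, kept in LRU order
        self.hits = 0
        self.misses = 0

    def _load(self, page):
        # Bring a page into the cache, evicting least recently used pages if full.
        self.pages[page] = True
        self.pages.move_to_end(page)
        while len(self.pages) > self.capacity:
            self.pages.popitem(last=False)

    def access(self, page):
        if page in self.pages:
            self.hits += 1
            self.pages.move_to_end(page)
        else:
            self.misses += 1
            self._load(page)
        # Guess that the following pages will be wanted next and load them early.
        for ahead in range(1, self.readahead + 1):
            if page + ahead not in self.pages:
                self._load(page + ahead)

cache = ReadaheadCache(capacity_pages=8, readahead=2)
for page in range(100):  # a purely sequential scan
    cache.access(page)
print(f"hits={cache.hits} misses={cache.misses}")
```

On a purely sequential scan like this one, only the very first access misses. Random access patterns would benefit far less, which is one reason the performance claim applies to certain workloads only.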

Ultrastar DC ME200 is ready for production orders today in 1TB, 2TB, and 4TB capacity options.

Why software-defined memory?

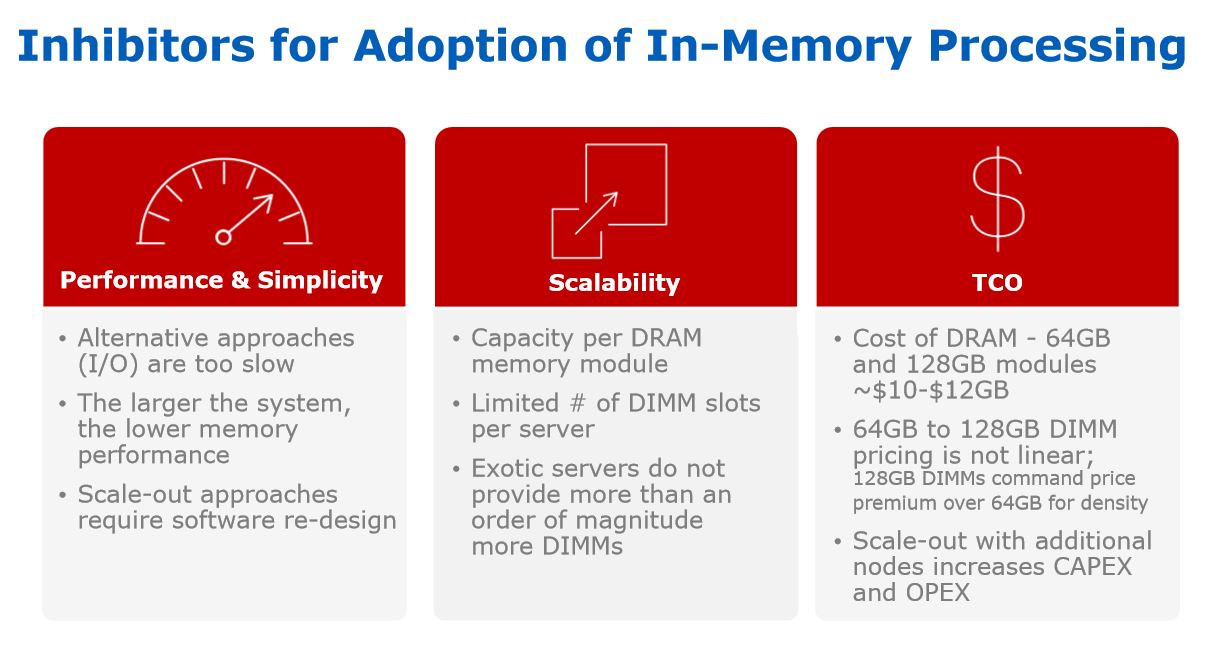

Server computers’ appetite for RAM is voracious. Enterprise workloads such as virtual machines and real-time analytics all require in-memory processing to operate effectively. While not new, software-defined memory is becoming increasingly viable as a solution to address the scalability and cost issues of increasingly RAM-intensive server workloads. These range from containers and embedded virtual machines to cloud services.

Larger server systems are often choked by RAM capacity constraints. Server CPUs have grown tremendously in core count. The Intel Xeon E7-8890 v4, for example, has 24 cores and 48 threads. When they’re fully loaded, there often isn’t enough addressable RAM to supply the workloads they’re running.

Traditional servers and server clusters are limited by the number of available DIMM slots on the motherboard. When all DIMM slots are populated, the only ways to increase RAM capacity are to invest in extra server clusters or to purchase higher-density RAM modules. Either option drives up cost and doesn’t make efficient use of existing hardware.

In addition, the cost of DRAM is still very high. What’s worse is that its cost doesn’t scale linearly with density due to factors such as supply shortages. Currently, 64GB and 128GB DIMMs cost $10 to $12 per gigabyte.
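To put the per-gigabyte figure in per-module terms, the short sketch below multiplies it out for the two DIMM sizes mentioned. It uses only the $10 to $12 range quoted above; the function name is hypothetical.

```python
PRICE_PER_GB_USD = (10, 12)  # low and high ends of the quoted range

def dimm_price_range(size_gb, per_gb=PRICE_PER_GB_USD):
    """Return (low, high) module price in USD for a DIMM of `size_gb`."""
    low, high = per_gb
    return size_gb * low, size_gb * high

for size_gb in (64, 128):
    low, high = dimm_price_range(size_gb)
    print(f"{size_gb}GB DIMM: ${low:,} to ${high:,}")
```

A single 128GB module therefore lands between $1,280 and $1,536 at those prices.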

While the number of DIMM slots is usually limited, server-grade CPUs have many PCIe lanes for connecting high-speed components such as SSDs. For example, the AMD Epyc 7218 CPU has 128 PCIe lanes. On a dual-chip system, that totals to 256.

This is where software-defined memory comes in. It unifies SSD storage into the system’s memory pool, leveraging the large number of fast NVMe SSDs that servers carry. Because more SSDs can fit into a single server, density can be increased and physical footprint decreased. SSDs are also much more affordable than DRAM.

What’s next?

With all these hard-to-tackle issues, the industry is turning to persistent memory for a solution. SSDs, while blazing fast by consumer standards, are still far too slow to compete with DRAM in enterprise environments. This led to the creation of Storage-Class Memory (SCM), also known as persistent memory, which blends aspects of both memory and flash storage. Intel, SanDisk (now owned by Western Digital), and HP have all joined in developing SCM solutions.