During the Nvidia GPU Technology Conference today, Nvidia CEO Jensen Huang revealed the Nvidia EGX A100 converged accelerator powered by the company’s next-generation Ampere graphics processing unit (GPU) architecture.

Though the Ampere GPU architecture is still shrouded in mystery, it has been confirmed that it will be built on TSMC's 7nm process. Ampere is considered a major architectural redesign of the current Volta architecture.

Ampere's first product, the A100, targets heavy data-centre workloads such as simulation, rendering, machine learning, and cloud virtualization. The A100 GPU packs 54 billion transistors and introduces new features such as a security engine and third-generation Tensor Cores with the new TensorFloat-32 (TF32) precision format. The EGX A100 also integrates an Nvidia Mellanox ConnectX-6 Dx network adapter onboard.

“By installing the EGX into a standard x86 server, you turn it into a hyper-converged, secure, cloud-native, AI powerhouse, it’s basically an entire cloud data centre in one box,” said Huang.

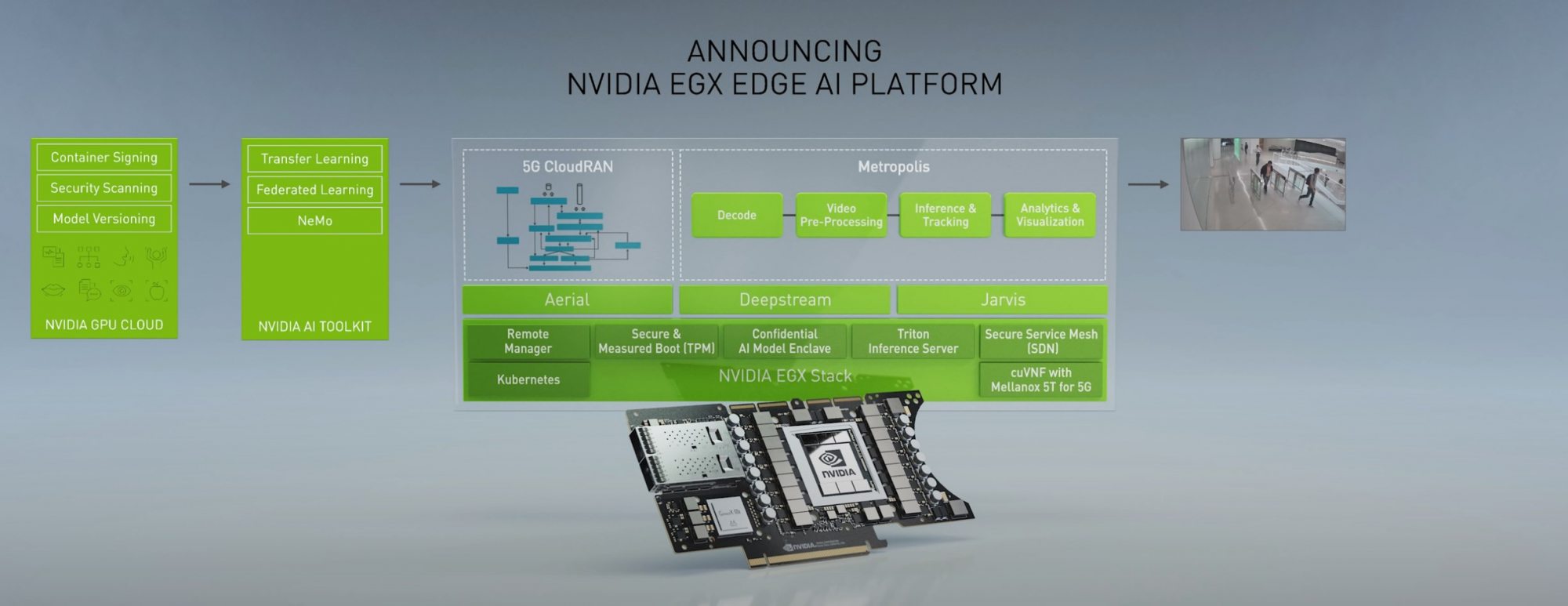

Complementing the EGX A100 is Nvidia’s EGX cloud-native AI platform with a focus on remote management and secure data processing.

The A100 is also designed with scalability in mind. With the multi-instance GPU (MIG) feature, a single A100 can be partitioned into up to seven independent GPU instances, each with its own dedicated resources. Conversely, multiple A100 GPUs can be linked through Nvidia's NVLink interconnect to operate as a single large GPU.

On its product page, Nvidia claims that the A100 can deliver up to six times higher performance for training and seven times higher performance for inference compared to Volta, Nvidia’s previous architecture.

The Nvidia EGX A100 is in full production and shipping to customers worldwide. Expected adopters include Amazon Web Services (AWS), Cisco, Dell Technologies, Google Cloud, and Microsoft Azure, among others. More details on the Ampere architecture will be revealed on Tuesday, May 19, at Nvidia's GTC virtual event.