Intel today announced its 3rd gen “Cooper Lake” Xeon processors with increased AI capabilities.

The 3rd gen Intel Xeon processors comprise 11 SKUs ranging between 16 to 28 cores and 32 to 56 threads. They carry up to 38.5MB of cache and support ECC DDR4 RAM of up to at 3200MHz.

In terms of core architecture, Cooper Lake is based on Intel’s 14nm node and is an iterative optimization of the Cascade Lake, Intel’s 2nd gen Xeon processors. With improved optimized manufacturing and design, Cooper Lake sees a frequency bump compared to last gen. For example, the newly introduced Xeon Platinum 8380H processor increases the clock speed by 300MHz on both single-core base and boost frequencies, albeit at the cost of raising the TDP from 205W to 250W. But even when comparing the Xeon Platinum 8280 to the Xeon Platinum 8376H, a SKU with 205W TDP, the Xeon Platinum 8376H’s single-core turbo boost is still 300MHz higher.

The launch lineup is divided by their suffix to indicate their memory support. SKUs “L” suffix support up to 4.5TB of memory per socket, whereas SKUs without “L” support up to 1.12TB of memory per socket.

While the “L” suffix marks SKUs with high memory compatibility, all 3rd gen Intel processors have received the “H” suffix. In consumer desktop processors, the “H” suffix designates high-performance graphics, but because Intel Xeon datacentre processors typically omit integrated graphics, it’s not clear what the “H” suffix denotes here.

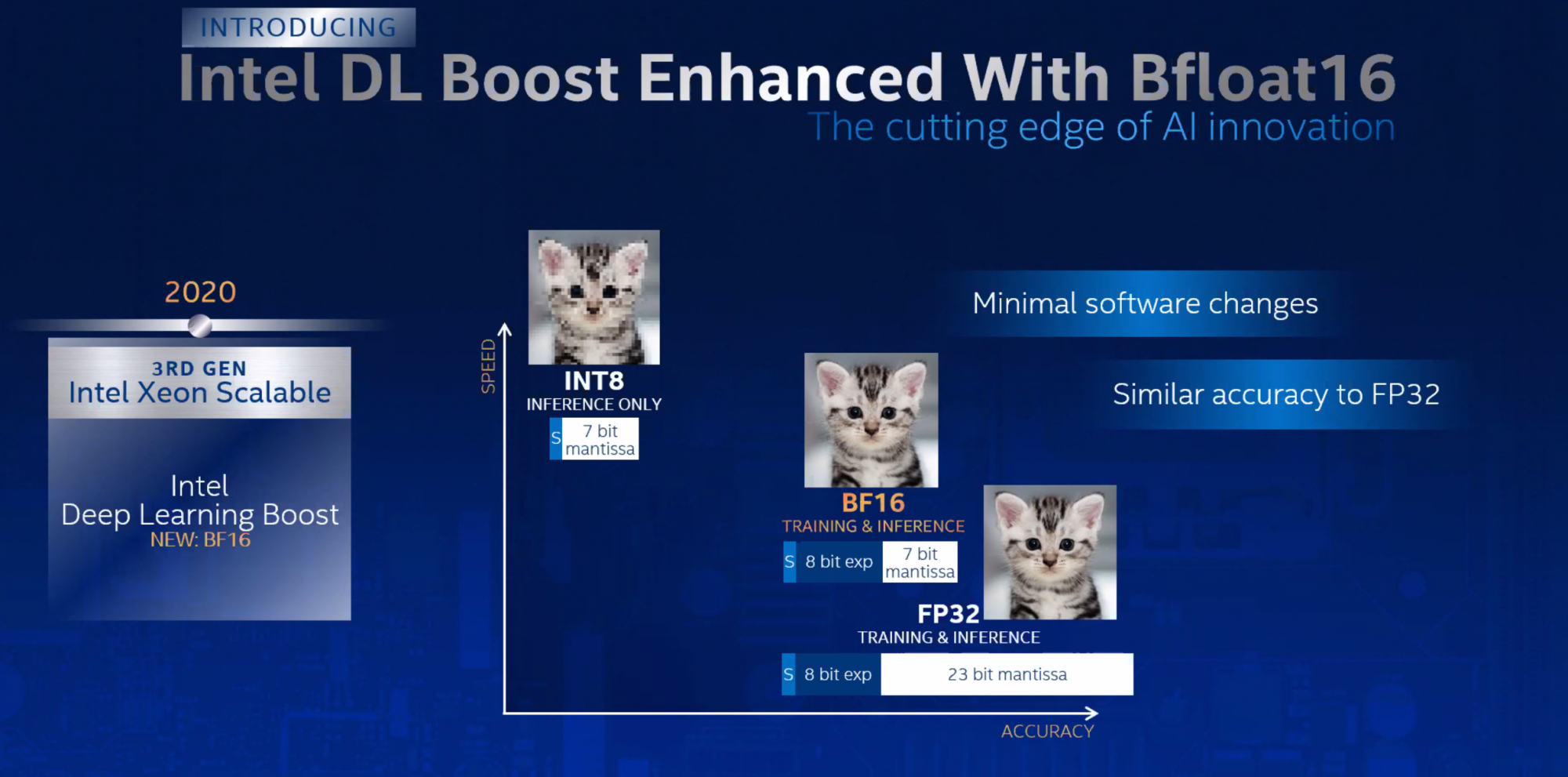

Like last year, Intel is very much focused on improving the AI performance of its chips. The 3rd gen Xeon processors continue to build on the Deep Learning Boost (DL Boost) feature by introducing brain floating16 extensions and vector neural network instructions (VNNI).

Bfloat16, or brain floating point 16 numbers, strikes a middle ground between 8bit integers (int8) and 32bit floating-point numbers (FP32), with the former ideal for fast volume processing and the latter for high precision. Due to its length, processing 16-bit floating-point numbers is faster than 32 bit floating-point numbers and more precise than 8-bit integers. Intel said during the presentation that bfloat16 provides nearly the same accuracy as FP32 in AI image inferencing while being just a bit slower than int8. The company expects that bfloat16 requires will require “very minimal” software changes for customers to reap its benefits.

VNNI, on the other hand, are instructions that improve neural network loops. Although it only encompasses a handful instructions, the operations they dictate, mainly ones that multiply two floating-point values together and combining the result in a 32-bit accumulator, is prevalently used in neural networks used for image analysis.

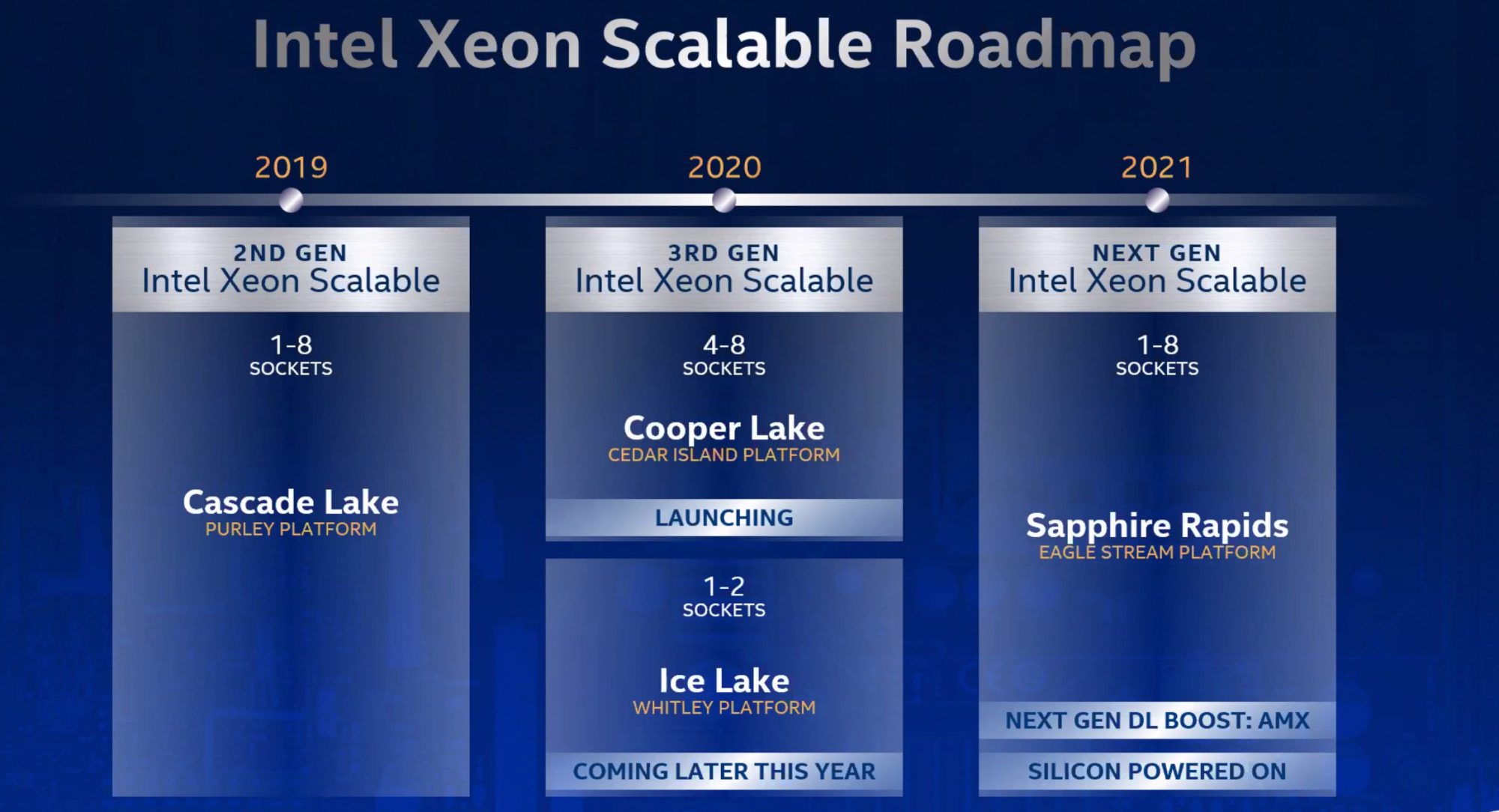

Through Intel’s Ultra Path Interconnect, Cooper Lake processors can be scaled between 4 to 8 sockets. During development, Intel had contemplated the merit of releasing Cooper Lake to dual-socket platforms, but ultimately reversed the decision due to it being too close to the release timing of the Ice Lake Xeon processors, which is expected to land later this year.

Intel claims that the enhanced DL Boost using bloat16 can reach up to 1.98 times higher database performance and provides a 1.9 times performance uplift overall compared to its 5-year-old platform.

In addition to announcing Cooper Lake, Intel also announced that Sapphire Rapids, intel’s next-gen Xeon platform, has completed its powered-on test. Sapphire Rapids will bring Intel Advanced Matrix Extensions, or AMX, for improved matrix calculation performance. AMX specifications will be published later this month for developers.