AMD has launched its RX 6000 series of graphics cards based on the revised RDNA architecture.

Three new cards were launched at its virtual launch presentation on Oct. 28: the Radeon RX 6800, Radeon RX 6800 XT, and the Radeon RX 6900 XT.

| Model | AMD RX 6900 XT | AMD RX 6800 XT | AMD RX 6800 |
| --- | --- | --- | --- |
| Compute units | 80 | 72 | 60 |
| Boost / game clock | 2250MHz / 2015MHz | 2250MHz / 2015MHz | 2105MHz / 1815MHz |
| ROPs | 128 | 128 | 96 |
| Infinity Cache | 128MB | 128MB | 128MB |
| Memory | 16GB GDDR6 | 16GB GDDR6 | 16GB GDDR6 |
| Ray Accelerators | 80 | 72 | 60 |
| Power connectors | 2 x 8-pin | 2 x 8-pin | 2 x 8-pin |
| Total board power | 300W | 300W | 250W |
| Price | US$999 | US$649 | US$579 |

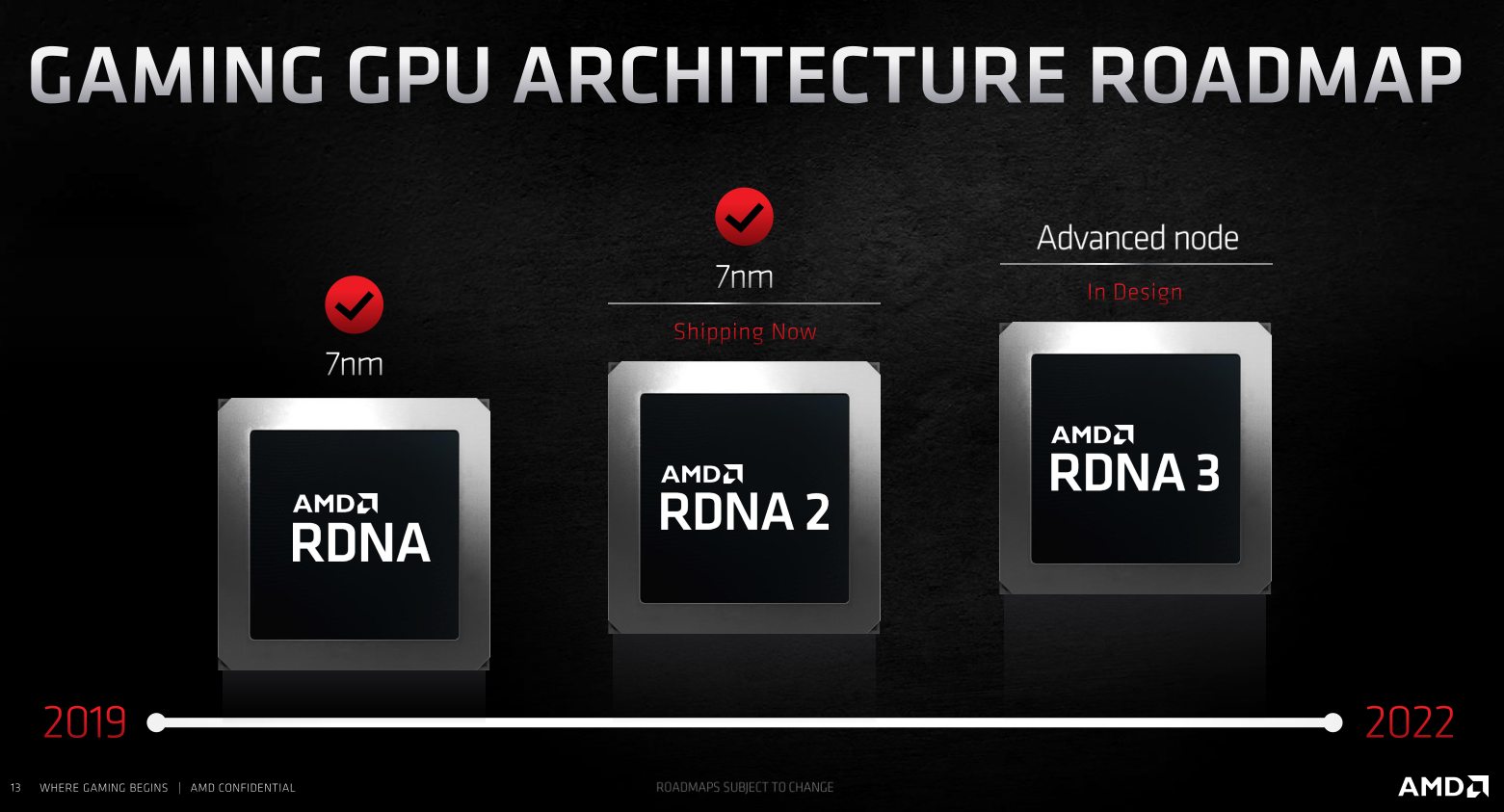

All three cards are based on the RDNA 2 architecture, an improved version of the RDNA architecture used by AMD's Radeon RX 5000 series graphics cards. AMD says RDNA 2 offers up to 54 per cent higher performance per watt than the first iteration, despite being built on the same TSMC 7nm process. At 4K resolution, AMD claims that RDNA 2 offers roughly twice the performance of RDNA.
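A quick back-of-envelope check shows how the two claims can fit together. The sketch below assumes, as a simplification, that performance equals performance per watt multiplied by board power, and compares the RX 6800 XT's 300W board power with the 225W reference board power of the RDNA-based Radeon RX 5700 XT (the 5700 XT figure is not from AMD's RX 6000 presentation).

```python
# Back-of-envelope estimate, assuming performance = perf-per-watt x board power.
# This is a simplification: real GPUs do not scale linearly with power.

RX_5700_XT_BOARD_POWER_W = 225  # RDNA reference card; not from AMD's RX 6000 slides
RX_6800_XT_BOARD_POWER_W = 300  # RDNA 2 card, from the spec table
PERF_PER_WATT_GAIN = 1.54       # AMD's claimed "up to 54 per cent" improvement


def estimated_speedup(perf_per_watt_gain: float, old_power_w: float, new_power_w: float) -> float:
    """Relative performance when efficiency improves and the power budget grows."""
    return perf_per_watt_gain * (new_power_w / old_power_w)


speedup = estimated_speedup(PERF_PER_WATT_GAIN, RX_5700_XT_BOARD_POWER_W, RX_6800_XT_BOARD_POWER_W)
print(f"Estimated RDNA 2 vs RDNA speedup: {speedup:.2f}x")
```

Under these assumptions the estimate lands at about 2.05x, in line with AMD's "roughly twice" claim; actual game performance will not scale this neatly.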

Debuting with RDNA 2 is AMD's Infinity Cache, a 128MB pool of cache memory built into the GPU and accessible to all of its compute units. AMD claims that Infinity Cache acts as a “massive bandwidth amplifier”, raising effective memory bandwidth to 1664GB/s, roughly 3.25 times the 512GB/s that the cards' 256-bit GDDR6 interface provides on its own. For reference, the Radeon VII, which used stacks of second-generation high-bandwidth memory (HBM2) on a 4096-bit memory bus, achieved a memory bandwidth of 1024GB/s.
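Peak DRAM bandwidth is bus width multiplied by the per-pin data rate, divided by eight bits per byte. The sketch below applies that formula, assuming 16Gbps GDDR6 on the RX 6000 cards' 256-bit bus and 2Gbps HBM2 on the Radeon VII's 4096-bit bus (the data rates are not stated in the article), and then divides AMD's 1664GB/s figure by the GDDR6 result.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin data rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed data rates (not stated in the article): 16Gbps GDDR6, 2Gbps HBM2.
rx6000_gddr6 = peak_bandwidth_gbs(256, 16.0)     # RX 6000 series, 256-bit bus -> 512.0
radeon_vii_hbm2 = peak_bandwidth_gbs(4096, 2.0)  # Radeon VII, 4096-bit bus -> 1024.0
INFINITY_CACHE_EFFECTIVE_GBS = 1664.0            # AMD's claimed effective bandwidth

print(f"RX 6000 GDDR6: {rx6000_gddr6:.0f}GB/s")
print(f"Radeon VII HBM2: {radeon_vii_hbm2:.0f}GB/s")
print(f"Infinity Cache amplification: {INFINITY_CACHE_EFFECTIVE_GBS / rx6000_gddr6:.2f}x")  # 3.25x
```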

AMD's focus on boosting memory bandwidth can be seen as a way to keep the GPU fed with data so that it runs at its peak. As AnandTech explained in its Nvidia Turing architecture deep dive, memory bandwidth does not improve at the same pace as transistor density. This was one of the reasons AMD opted for expensive HBM2 memory on its Radeon VII graphics card.

Now back to the design at hand. With the first RDNA cards, AMD nearly doubled graphics memory bandwidth compared with its older Polaris-based Graphics Core Next (GCN) cards by switching from GDDR5 to GDDR6 memory on a 256-bit bus. With RDNA 2, AMD kept the 256-bit bus and standard GDDR6 memory rather than moving to a wider 384-bit bus or the faster GDDR6X memory that Nvidia uses on its RTX 3080 and RTX 3090. AMD is therefore likely banking on Infinity Cache to alleviate any memory bottleneck that could hamper the performance of its Ray Accelerator, a new dedicated ray tracing unit added to each RDNA 2 compute unit.
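One simplified way to see why a large on-die cache can act as a "bandwidth amplifier": if a fraction of memory requests hit in the cache, only the misses consume GDDR6 bandwidth, so the same DRAM link can sustain more total traffic. The model below is an illustrative assumption, not AMD's methodology. It assumes 512GB/s of DRAM bandwidth (256-bit bus at 16Gbps), ignores the cache's own bandwidth limit and latency, and uses hypothetical hit rates.

```python
def effective_bandwidth_gbs(dram_bw_gbs: float, hit_rate: float) -> float:
    """Total traffic sustainable when only cache misses reach DRAM (simplified model)."""
    if not 0.0 <= hit_rate < 1.0:
        raise ValueError("hit_rate must be in [0, 1)")
    return dram_bw_gbs / (1.0 - hit_rate)


def hit_rate_needed(dram_bw_gbs: float, target_bw_gbs: float) -> float:
    """Hit rate at which the simplified model reaches target_bw_gbs."""
    return 1.0 - dram_bw_gbs / target_bw_gbs


DRAM_BW_GBS = 512.0  # assumed: 256-bit bus x 16Gbps GDDR6

# Hypothetical hit rates, chosen only to show the trend.
for h in (0.0, 0.25, 0.5, 0.75):
    print(f"hit rate {h:.2f}: {effective_bandwidth_gbs(DRAM_BW_GBS, h):.0f}GB/s")

print(f"hit rate needed for 1664GB/s: {hit_rate_needed(DRAM_BW_GBS, 1664.0):.2f}")
```

The design point this illustrates is that every cache hit is traffic the narrower 256-bit bus never has to carry, which is the trade-off AMD made instead of widening the bus.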

Smart Access Memory is another memory enhancement that can increase performance. The catch is that it can be enabled only when an RX 6000 series graphics card is paired with an AMD Ryzen 5000 series processor. Once enabled, the processor has “full access” to the GPU memory. AMD said that when combined with the new Rage Mode one-click overclocking feature, Smart Access Memory could let gamers squeeze out an extra 2 to 13 per cent of performance for free. During the presentation, AMD mentioned Smart Access Memory only in connection with 500 series chipset motherboards, so it's not clear whether 400 series chipset motherboards will support it as well.

During the presentation, AMD CEO Lisa Su said that a super-resolution feature is in the works to “give gamers an option for more performance when using ray tracing.” Su did not announce when it will be available.

The RX 6000 series graphics cards bring support for Microsoft's DirectX 12 Ultimate graphics API, which aims to increase visual fidelity and processing efficiency through four key technologies:

- DirectX Raytracing (DXR): an API for hardware-accelerated ray tracing that produces more realistic lighting, shadows and reflections.

- Variable rate shading: renders different parts of an image at different levels of detail.

- Mesh shaders: a new geometry pipeline design that makes shaders more flexible and efficient.

- Sampler feedback: a feature that tracks which texture data is actually used, helping games better predict which graphical resources should be loaded next.
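Variable rate shading saves work by running one pixel-shader invocation per block of pixels in less important regions. The sketch below counts invocations for a 4K frame; the split of the frame into full-rate, 2x2 and 4x4 regions is invented purely for illustration and does not describe any real game or AMD's implementation.

```python
# Hypothetical VRS example: count pixel-shader invocations for a 4K frame.
# A coarse shading rate of (x, y) runs one invocation per x-by-y block of pixels.
WIDTH, HEIGHT = 3840, 2160

# (percent of frame, (rate_x, rate_y)); an invented split for illustration only
REGIONS = [
    (50, (1, 1)),  # detailed areas: full rate, one invocation per pixel
    (30, (2, 2)),  # less important areas: one invocation per 2x2 block
    (20, (4, 4)),  # e.g. fast-moving or peripheral areas: one per 4x4 block
]


def shader_invocations(width: int, height: int, regions) -> int:
    """Approximate invocation count, assuming each region divides evenly into blocks."""
    total_pixels = width * height
    invocations = 0
    for percent, (rate_x, rate_y) in regions:
        pixels = total_pixels * percent // 100
        invocations += pixels // (rate_x * rate_y)
    return invocations


full_rate = WIDTH * HEIGHT
with_vrs = shader_invocations(WIDTH, HEIGHT, REGIONS)
print(f"{with_vrs:,} of {full_rate:,} invocations ({with_vrs / full_rate:.1%} of full rate)")
```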

Through what it describes as holistic design optimizations for power efficiency, AMD limited the total board power of its RX 6000 series graphics cards to 300W. According to the presentation slides, AMD set a 650W power supply as the baseline requirement. That may be enough to run the RX 6800, but AMD recommends a 750W unit for the RX 6800 XT and an 850W unit for the RX 6900 XT.
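The power figures above fit in a small lookup table. In the sketch below the wattages are the board-power and PSU figures from this article, with the 650W baseline applied to the RX 6800; `meets_recommendation` is a hypothetical helper, and "headroom" here simply means the PSU rating minus the card's board power.

```python
# Board power and recommended PSU wattage per card, as given in this article.
CARDS = {
    "RX 6800": {"board_power_w": 250, "recommended_psu_w": 650},  # 650W baseline
    "RX 6800 XT": {"board_power_w": 300, "recommended_psu_w": 750},
    "RX 6900 XT": {"board_power_w": 300, "recommended_psu_w": 850},
}


def meets_recommendation(card: str, psu_w: int) -> bool:
    """Hypothetical helper: does a PSU meet AMD's recommendation for this card?"""
    return psu_w >= CARDS[card]["recommended_psu_w"]


for name, spec in CARDS.items():
    headroom = spec["recommended_psu_w"] - spec["board_power_w"]
    print(f"{name}: {spec['recommended_psu_w']}W PSU, {headroom}W left for the rest of the system")

print(meets_recommendation("RX 6800 XT", 750))  # True
print(meets_recommendation("RX 6900 XT", 750))  # False
```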

For the most part, AMD's carefully crafted presentation strongly implies that the Radeon RX 6000 series will deliver better value than rival Nvidia's cards. For graphics cards, however, value isn't simply a matter of price; performance at the targeted resolution also plays into the equation.

First up is the Radeon RX 6800, the most affordable option at US$579. AMD compared the Radeon RX 6800 to the Nvidia GeForce RTX 2080 Ti, Nvidia's previous flagship built on its Turing architecture. AMD's presentation slides showed a convincing victory for the RX 6800 at 1440p and a solid lead at 4K. Based on preliminary reviews by various outlets, the RTX 2080 Ti performs roughly on par with the Nvidia GeForce RTX 3070 launching on Oct. 29, so AMD's victory here could well translate into a win against Nvidia's midrange contender. Note, however, that the RTX 3070 is US$80 cheaper and comes with only 8GB of memory.

Next up is the Radeon RX 6800 XT at US$649, which squared off against Nvidia's current-generation GeForce RTX 3080. Not only did it post seriously competitive numbers against Nvidia's card, but it did so at a lower power draw (which AMD pointedly highlighted by enclosing it in bold brackets). It also undercuts Nvidia's card by US$50, improving the value proposition slightly.

Saving the best for last, AMD pitted its top-shelf Radeon RX 6900 XT against Nvidia's flagship GeForce RTX 3090. With both Rage Mode overclocking and Smart Access Memory enabled, the RX 6900 XT traded blows with Nvidia's best at 4K resolution. And at US$999, the RX 6900 XT undercuts the RTX 3090 Founders Edition by a significant US$500.
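The price side of these three match-ups can be tabulated in a few lines. AMD's prices are from this article; the Nvidia prices (US$499, US$699 and US$1,499) are the Founders Edition launch prices and are added here for the comparison. The sketch deliberately covers price only, not performance.

```python
# Launch prices in US dollars. AMD prices are from this article; Nvidia prices are
# the Founders Edition launch prices, added here for comparison.
MATCHUPS = [
    ("RX 6800", 579, "RTX 3070", 499),
    ("RX 6800 XT", 649, "RTX 3080", 699),
    ("RX 6900 XT", 999, "RTX 3090", 1499),
]


def price_gap(amd_price, nvidia_price):
    """Return the AMD card's price difference vs. its Nvidia rival: (US$, fraction)."""
    diff = amd_price - nvidia_price
    return diff, diff / nvidia_price


for amd, amd_price, nvidia, nvidia_price in MATCHUPS:
    diff, rel = price_gap(amd_price, nvidia_price)
    print(f"{amd} vs {nvidia}: {diff:+d} USD ({rel:+.1%})")
```

A positive gap means the AMD card costs more; as noted above, a fair value judgment would also weigh performance at the target resolution.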

The Radeon RX 6800 and Radeon RX 6800 XT will be available globally on Nov. 18, and the Radeon RX 6900 XT on Dec. 8. AMD has promised to take every measure it can to prevent scalper bots from instantly draining inventory at launch, a nightmare scenario that struck Nvidia when it launched its GeForce RTX 30 series graphics cards.